19% Moon on May 13, 2024

I shot the May 13, 2024 Moon at 19% illumination (a waxing crescent approaching first quarter). The idea was to try both the "lucky imaging" approach and some custom-written Python code for aligning and stacking the frames.

I gather that the idea behind "lucky imaging" is to stack only the very best frames, which could be as few as 1% of all captured frames. I've stacked multiple exposures before, but I don't believe I've ever intentionally shot a large number of frames with the goal of using only the highest-quality 1% of them.

Below is the final image. It's a stack of 59 frames, the best 5% of 1,190. I used a Canon EOS R6 and a Sigma 150-600mm Contemporary with a 1.4x teleconverter. All frames were shot handheld at 840mm, f/9, ISO 400, and 1/125 of a second.

I did stacks of the best 12 frames, 24 frames, and 59 frames (the top 1%, 2%, and 5% respectively) and I thought the stack of 59 frames was the best balance of noise reduction and detail.
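The selection step can be sketched roughly as follows. This post does not say which quality metric my code used, so treat the variance-of-Laplacian score here as an assumption (it is a common sharpness proxy), and `sharpness_score` and `select_best` as hypothetical names for illustration only.

```python
import numpy as np

def sharpness_score(gray):
    """Variance of a 4-neighbour Laplacian (assumed metric; higher = sharper)."""
    g = np.asarray(gray, dtype=np.float64)
    # Discrete Laplacian on the interior pixels: sum of 4 neighbours minus 4x centre.
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def select_best(scores, keep):
    """Return indices of the `keep` highest-scoring frames, best first."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:keep]

# Stack sizes from this session expressed as a share of the 1,190 frames.
for keep in (12, 24, 59):
    print(f"{keep} frames = {100 * keep / 1190:.1f}% of 1190")
```

Scoring each frame once and sorting the indices keeps the three stack sizes cheap to compare, since the 12-frame and 24-frame stacks are just shorter prefixes of the same ranking.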

The CR3 files were converted to PNGs using Darktable, then I used some Python code I wrote myself for frame quality calculation, brightness normalization, alignment with 3x drizzle, and stacking. I then used AstroSurface for its wavelet and deconvolution tools. Photoshop's "bicubic sharper" resampling algorithm was used to reduce the 3x image down to 1.5x. Finally, I did some sharpening, cropping, and other adjustments in Lightroom Classic before exporting as an 8-bit JPEG.
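As an illustration of the brightness normalization step, here is a minimal sketch. It assumes normalization means scaling each frame so its mean pixel value matches a reference level; the post does not describe the actual method, and `normalize_brightness` is a hypothetical name.

```python
import numpy as np

def normalize_brightness(frame, target_mean):
    """Scale `frame` so its mean pixel value equals `target_mean` (assumed method)."""
    f = np.asarray(frame, dtype=np.float64)
    current = f.mean()
    if current == 0.0:
        return f  # an all-black frame cannot be rescaled
    return f * (target_mean / current)

# Example: match a slightly darker frame to a reference frame's mean level.
reference = np.array([[100.0, 200.0], [300.0, 400.0]])
darker = reference * 0.9
matched = normalize_brightness(darker, reference.mean())
```

Working in floating point avoids rounding until the end; values would still need clipping before being written back to a 16-bit file.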

AstroSurface was a new tool for me. It's free software (not for commercial use) in active development as of early 2024, and it replaces the older RegiStax 6 software I had been using for wavelet sharpening. At the time of writing, what looks like a beta of the new "waveSharp 1.0" is also available, so perhaps it will compete with AstroSurface as updated wavelet sharpening software.

AstroSurface ran very nicely in wine64 on my Ubuntu Linux machine, and handled the relatively large 3x drizzle images (10,221 pixels wide by 6,813 pixels high, at 16 bits per channel) just fine.

My custom Python code performed a "drizzle" alignment by first repeating each pixel n times (in this case, 3) to scale the image by 3x in each dimension. After doing that, and aligning the frames with an arbitrary rotation and whole-pixel translations on the enlarged grid, the idea was to find valid data "between" the pixels of any single frame.

For stacking, I implemented a crude sigma clipping algorithm that finds the median for each pixel across the stacked images, throws away any outliers beyond n standard deviations (2.0 in this case), and then takes the median of the remaining values for each pixel. Because this median was computed separately for each R/G/B channel, I then had to find which image contained an actual pixel closest to this hypothetical "medians pixel" across all three channels. The image scaling and stacking were brutally slow in my Python code (taking about a full day to run), but the approach appears to have worked.
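The upscaling and stacking steps described above can be sketched with NumPy as follows. This is not the original code, just a minimal vectorized illustration; the function names are hypothetical, and two details are assumptions: deviations are measured from the median using the per-pixel standard deviation, and "closest" means the smallest Euclidean distance in RGB. The rotation and translation alignment is left out.

```python
import numpy as np

def upscale_repeat(img, n=3):
    """Repeat every pixel n times along both image axes (nearest-neighbour upscale)."""
    return np.repeat(np.repeat(img, n, axis=0), n, axis=1)

def sigma_clipped_median(stack, n_sigma=2.0):
    """Per-pixel, per-channel median after rejecting outliers.

    stack has shape (frames, H, W, C) and is assumed to be already aligned.
    """
    med = np.median(stack, axis=0)
    std = np.std(stack, axis=0)
    # Keep samples within n_sigma standard deviations of the median (assumption).
    keep = np.abs(stack - med) <= n_sigma * std
    clipped = np.where(keep, stack, np.nan)
    return np.nanmedian(clipped, axis=0)

def nearest_real_pixel(stack, target):
    """For each pixel, pick the frame whose RGB value lies closest to `target`."""
    dist = np.sum((stack - target) ** 2, axis=-1)   # (frames, H, W)
    best = np.argmin(dist, axis=0)                  # (H, W) frame index per pixel
    picked = np.take_along_axis(stack, best[None, ..., None], axis=0)
    return picked[0]                                # (H, W, C)

# Tiny demonstration: six constant frames, one of them a bright outlier.
values = [10, 11, 12, 13, 14, 100]
frames = np.stack([np.full((2, 2, 3), v, dtype=np.float64) for v in values])
medians = sigma_clipped_median(frames, n_sigma=2.0)
result = nearest_real_pixel(frames, medians)
```

In the demonstration the frame with value 100 is rejected at every pixel, so the clipped median is computed from the remaining five frames. Holding 59 frames at 3x scale in one array needs a lot of memory, so a real run would likely process the image in tiles.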

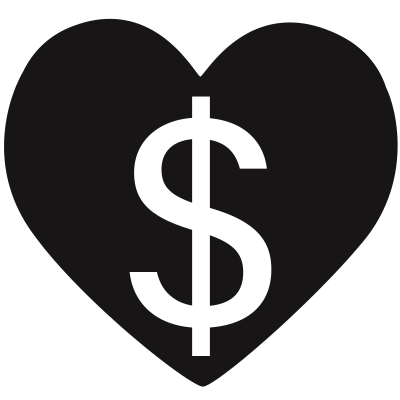

Below is a comparison between the best single frame (on the left) and the final image resized back down to 1x, where both are zoomed in a bit:

Even when resized back down to the original size, you can see that the stacked image (the one on the right) retains more detail, especially in the more distant parts of the Moon visible toward the edge of the lunar disc.

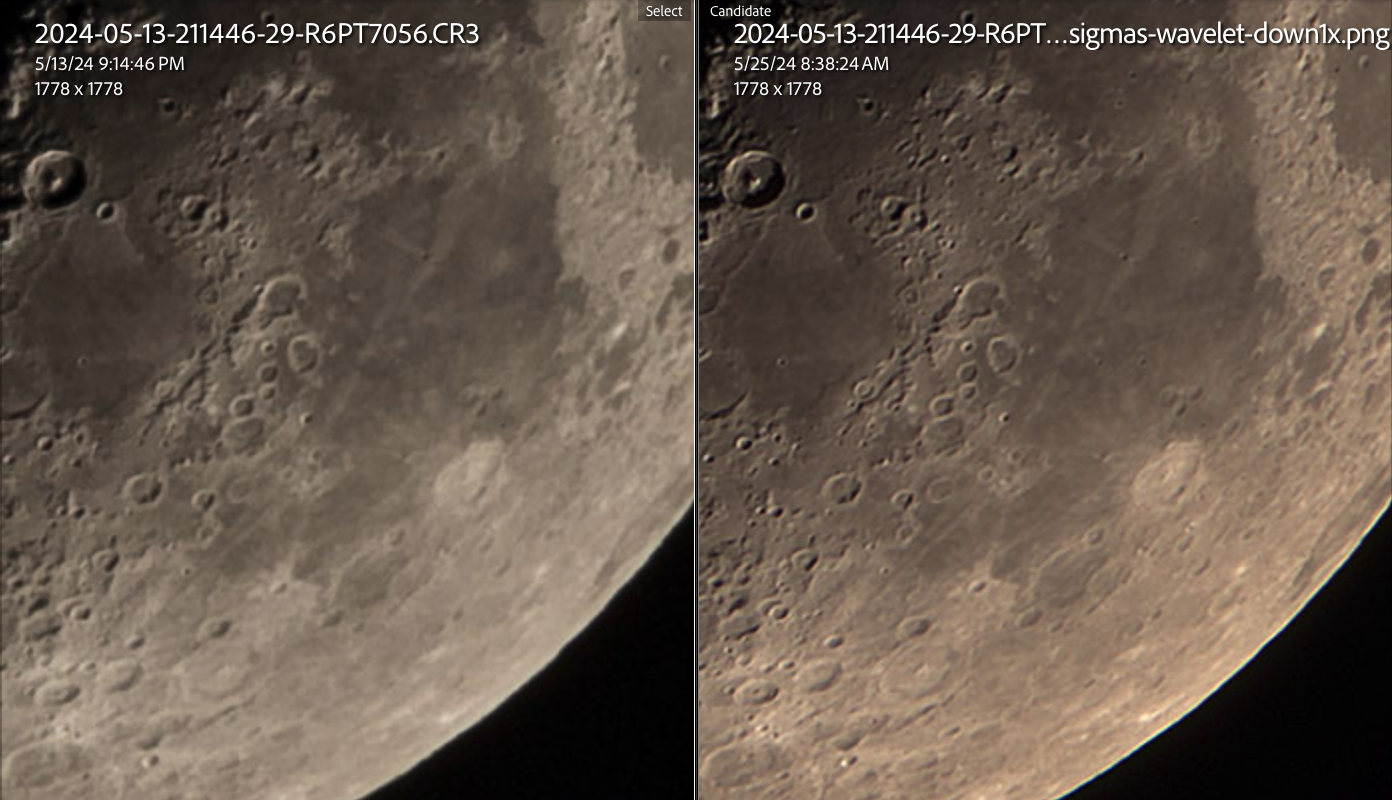

If we blow up the original to 1.5x (again on the left) and compare that to the 3x image downsized to 1.5x (on the right), we can see quite a bit more detail in the stacked image on the right:

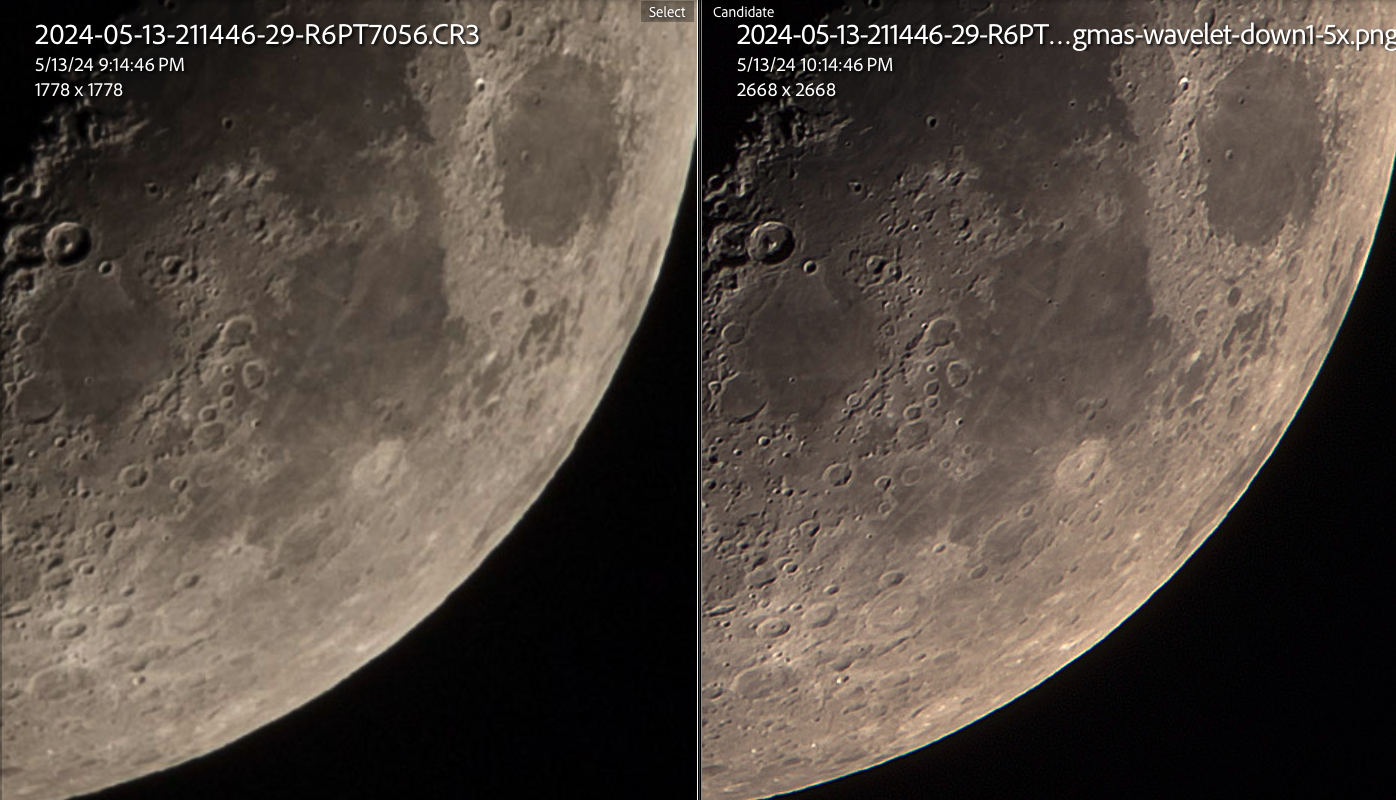

And finally, if we blow up the original to 3x (on the left) and compare that to the full size 3x drizzle image, we see that the 3x image on the right has lost a lot of apparent image quality but still shows a lot more fine detail:

In summary, this was a great little project to exercise my Python code for aligning and stacking, and for testing AstroSurface for wavelet sharpening.

Thumbnails and signature info were published to this gallery page.

I also published the main image above, and some of the background information, to my AstroBin page.